
I’m a fifth-year Ph.D. candidate in the Intelligent Systems Program at the University of Pittsburgh, jointly advised by Kayhan Batmanghelich (BatmanLab, Boston University) and Shyam Visweswaran (VisLab). My research lies at the intersection of machine learning, medicine, and human–AI collaboration, with a focus on developing algorithms that make AI systems safer, more interpretable, and clinically reliable. Broadly, I study how AI models behave under uncertainty and how they interact with human expertise in high-stakes decision making. My work ranges from understanding model failures, such as shortcut and spurious-feature learning, to designing hybrid human–AI systems that combine algorithmic predictions with human judgment to reach expert-level performance in healthcare applications.

“When should AI decide, and when should it defer? How can human expertise be modeled and trusted in the loop?”

My broader goal is to advance AI systems that understand their limitations and collaborate effectively with humans, especially in domains where reliability and safety are non-negotiable.

I was a visiting researcher with the Machine Learning Research Group (MLRG) at the University of Guelph (Ontario, Canada) and the University of Toronto, where I was advised by Prof. Graham W. Taylor and Prof. Joel D. Levine on computer-vision models for biological data. Before that, I interned at the Robotics Research Center (RRC) at the International Institute of Information Technology, Hyderabad, where I worked with Prof. Madhav Krishna and Dr. Krishna Murthy on robotics and perception. I received my M.E. in Software Systems and B.E. (Hons.) in Electrical and Electronics Engineering from BITS Pilani, where I was advised by Prof. Surekha Bhanot.

Email / CV / Google Scholar / GitHub


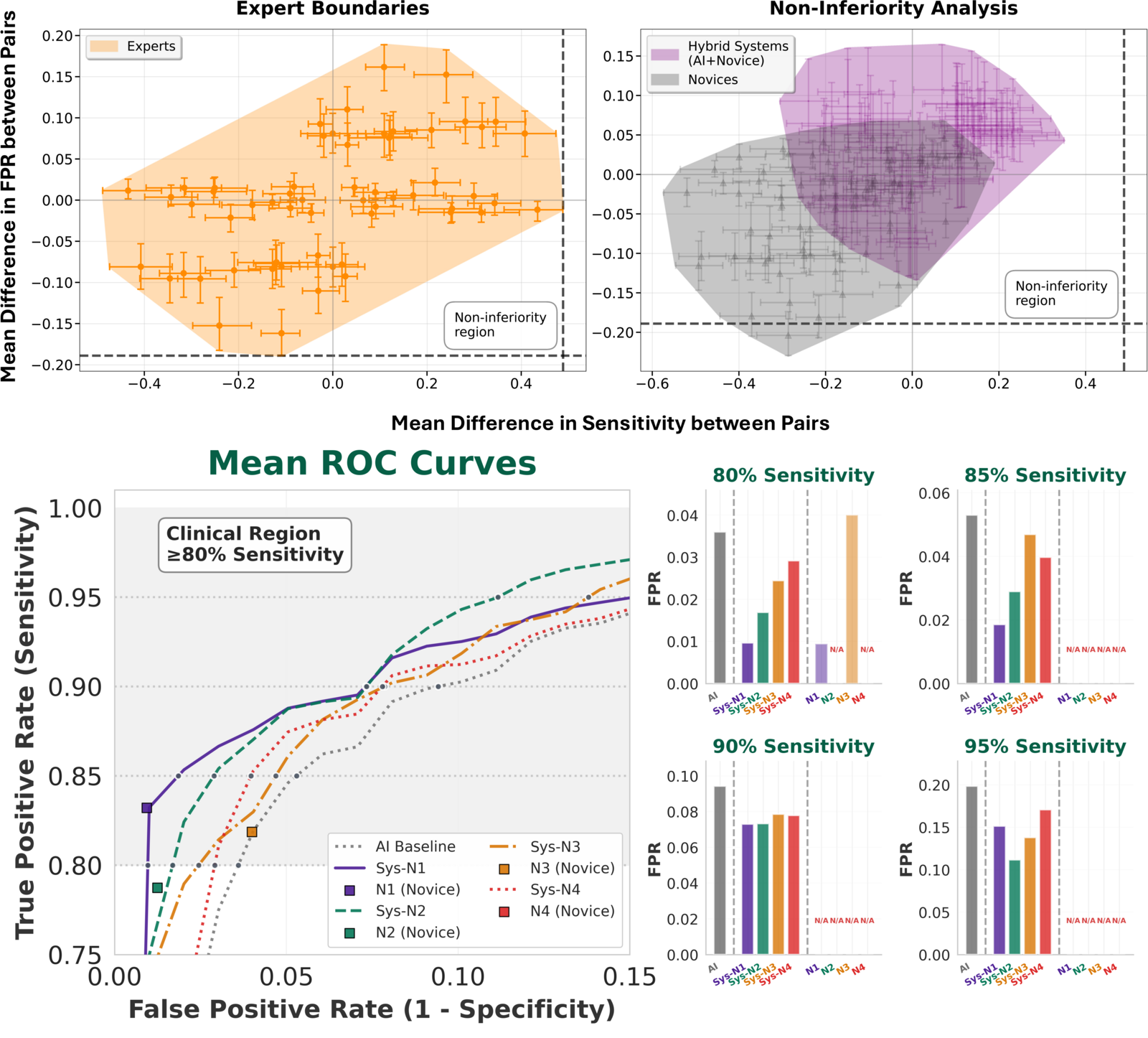

(in progress) Developing hybrid human–AI systems that enable reliable intraoperative EEG monitoring even in settings with limited expert availability.


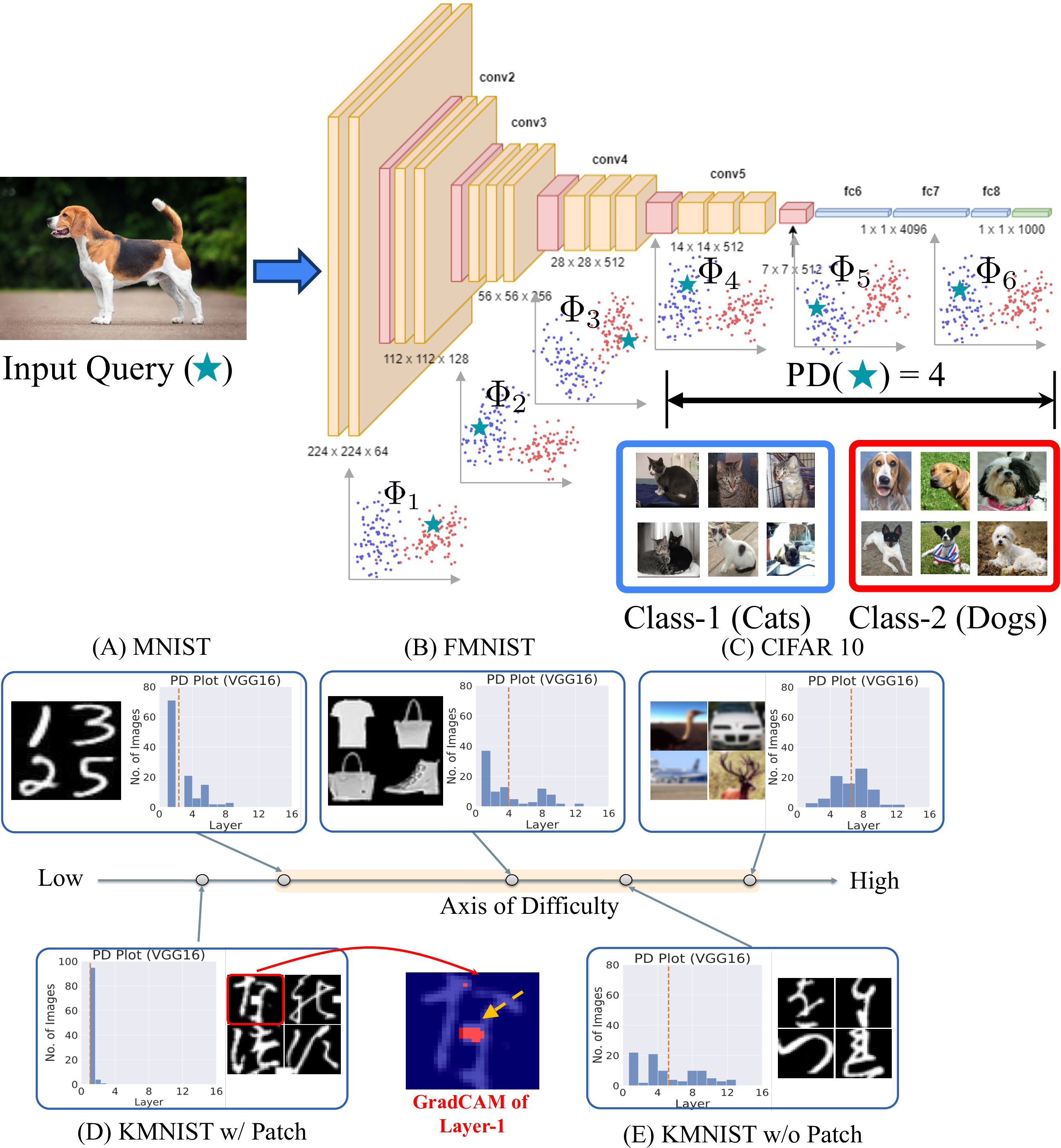

Nihal Murali, Aahlad Puli, Ke Yu, Rajesh Ranganath, Kayhan Batmanghelich. TMLR 2023; ICMLW 2023.

Paper / GitHub / Video / Poster / Slides / Talk

Investigates how neural networks learn spurious features during training and shows that monitoring early-layer dynamics can reveal harmful shortcuts before deployment.


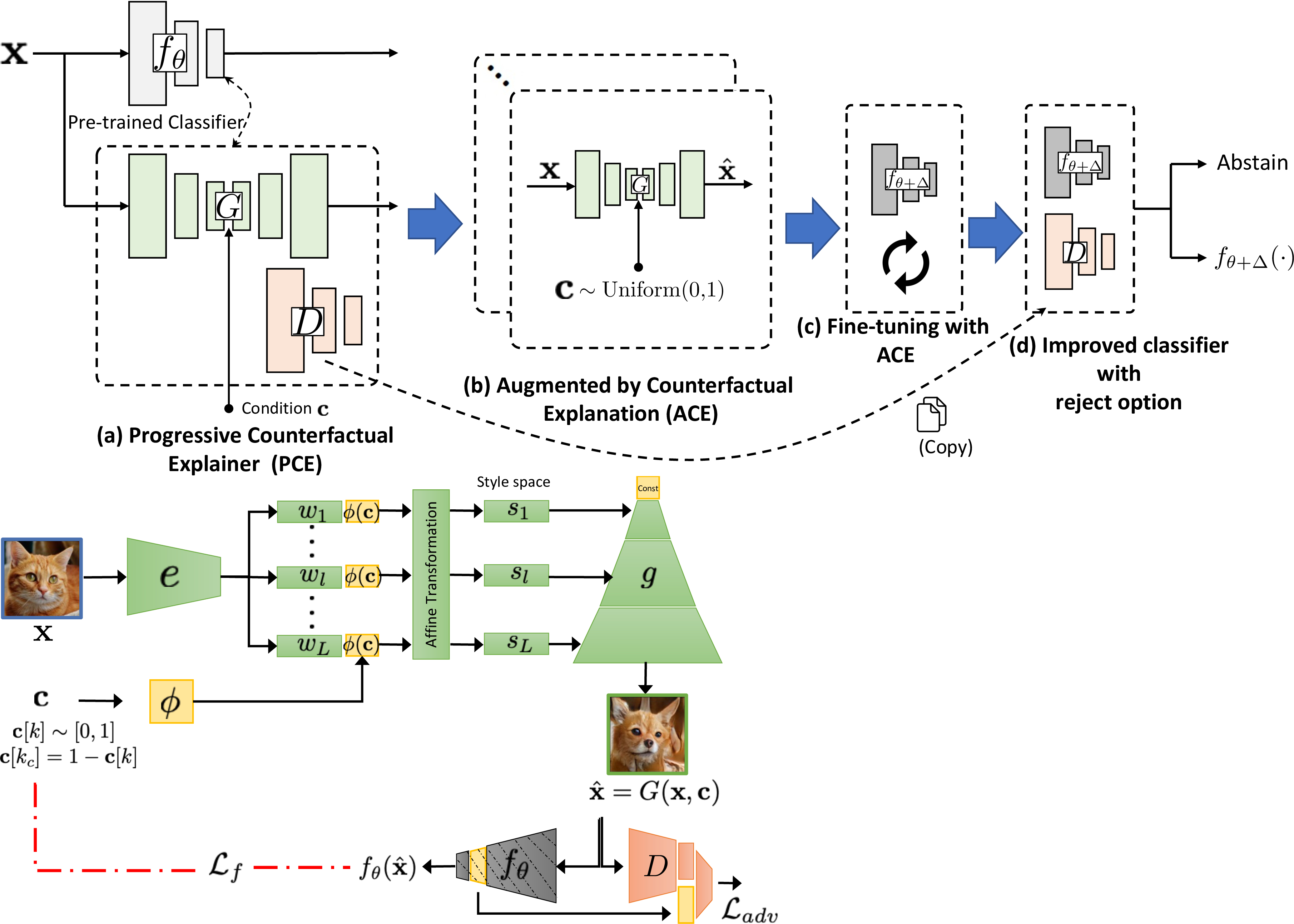

Sumedha Singla*, Nihal Murali*, Forough Arabshahi, Sofia Triantafyllou, Kayhan Batmanghelich. WACV 2023. (*Equal contribution)

Paper / GitHub

Proposed ACE, a counterfactual-based fine-tuning method that uses GAN-generated augmentations to reduce overconfidence and improve uncertainty estimation in deep neural networks.


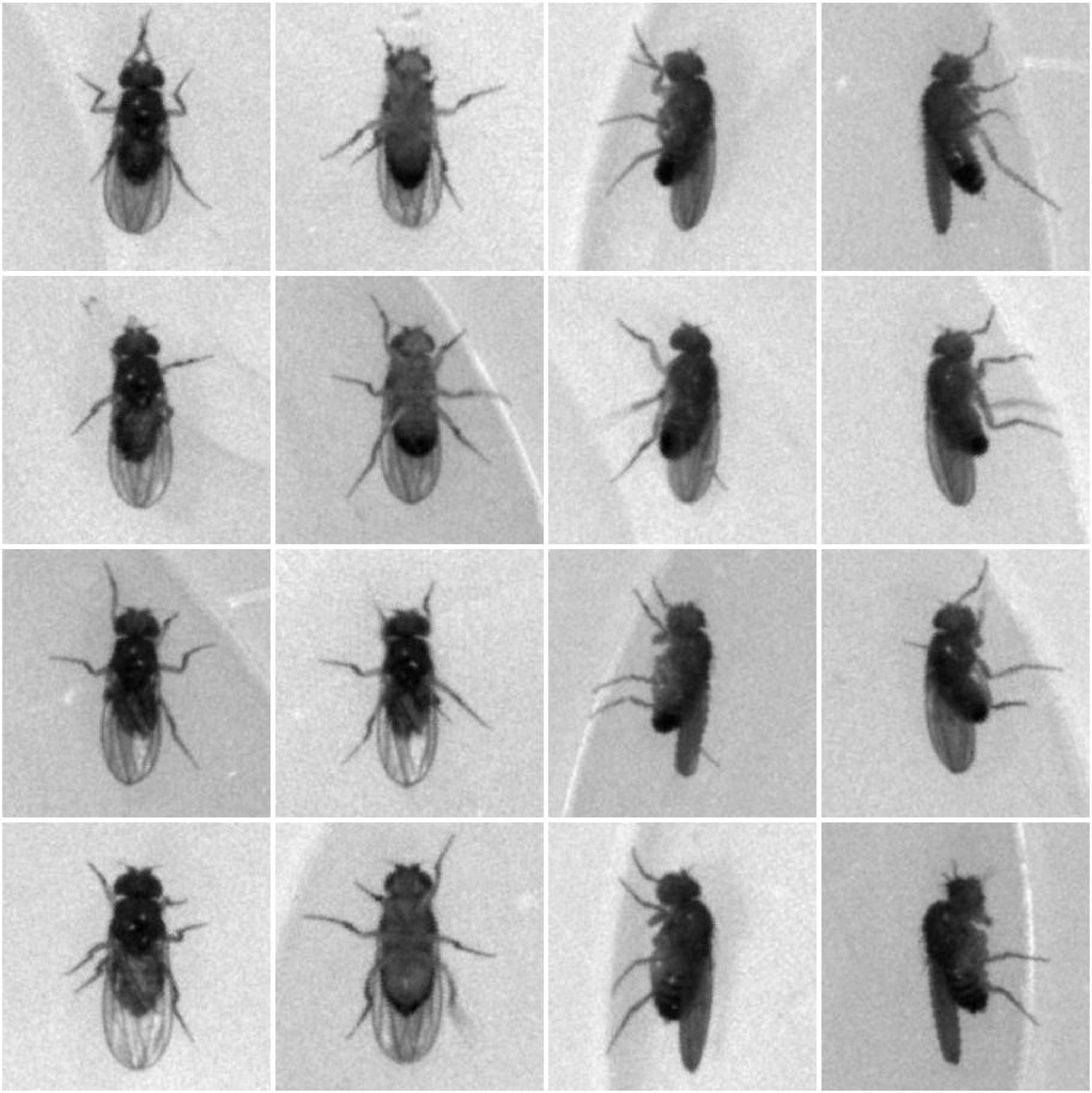

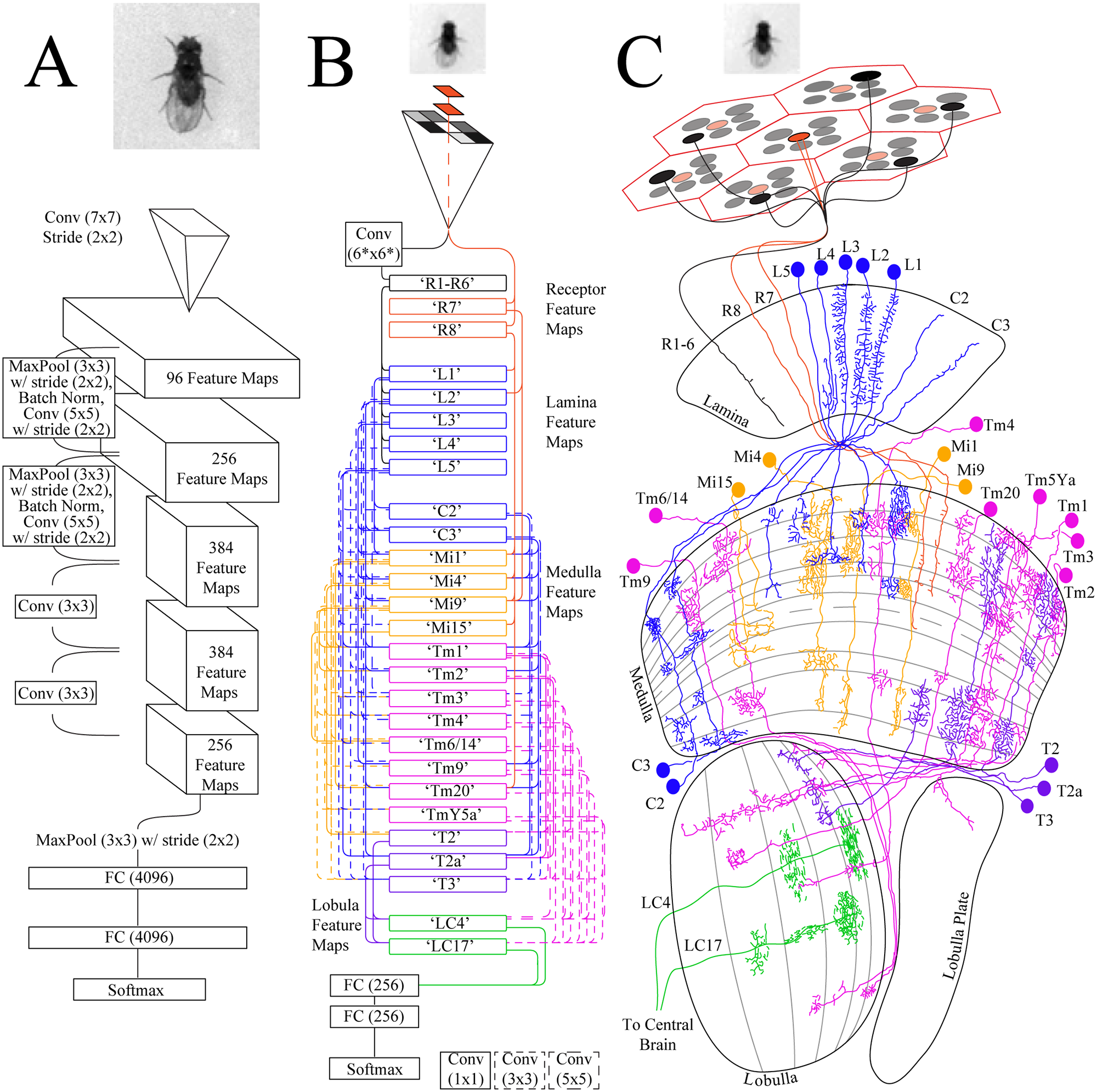

Nihal Murali, Jon Schneider, Joel Levine, Graham Taylor. WACV 2019.

Paper / GitHub / Dataset

Developed a CNN-based method to maintain the identities of individual fruit flies across days, enabling long-term behavioral tracking without physical tagging.


Jon Schneider, Nihal Murali, Graham Taylor, Joel Levine. PLOS ONE 2018.

Paper / News

Demonstrated that individual fruit flies have distinctive visual features that deep convolutional networks can detect. This suggests that flies may be able to visually discriminate among conspecifics despite their low-resolution vision.


Bachelor’s Thesis, BITS Pilani.

Developed a deep learning system based on ResNet architectures to automatically recognize and re-identify individual Drosophila melanogaster across days. The system eliminates manual tagging and enables long-term behavioral experiments.

Template taken from Jon Barron